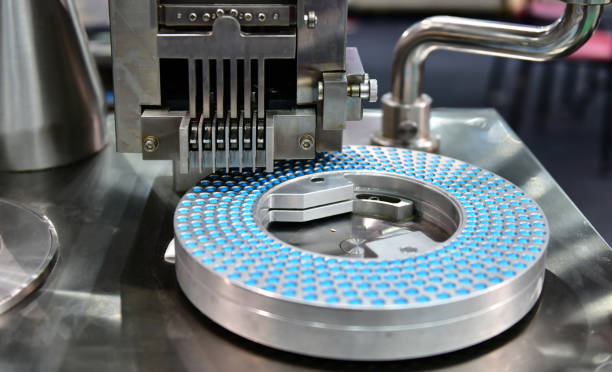


An X-Ray View of Sensor Data

Large medical devices such as CAT scanners and magnetic resonance imaging systems are a major investment for doctors' practices and hospitals. Unexpected breakdowns not only cause high costs but also jeopardize patient care.

Because such failures are hard to predict, manufacturers must keep many spare parts permanently in stock, which ties up considerable capital. When a device breaks down, technicians have to take numerous spare parts to the customer on speculation, and any parts that turn out not to be needed must be thoroughly inspected before they can be restocked.

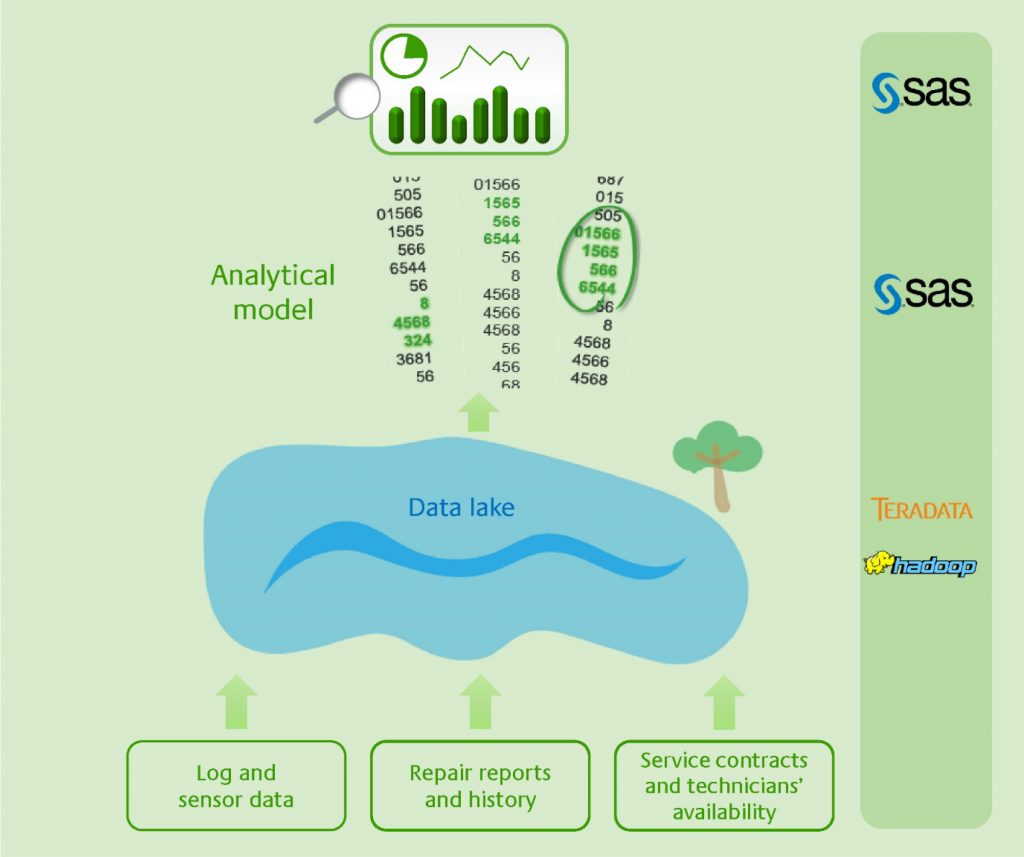

A German manufacturer decided to analyze the automatically transmitted sensor data centrally and, based on an analytical model, calculate how likely it is that individual parts will fail.
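The analytical model itself is not described here. As a minimal sketch of the general idea, assuming a logistic regression (one common choice, swapped in for illustration because it outputs a probability directly), a per-part failure probability could be estimated from sensor-derived features. The two features (daily error count and operating hours in thousands), the training rows, and all function names are hypothetical.

```python
import math

# Hypothetical features per observation: [daily error count, operating hours in thousands].
# Label 1 = the part failed in the following period, 0 = it did not. All data is invented.


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def failure_probability(w, b, x) -> float:
    """Estimated probability that the part fails, given feature vector x."""
    return sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b)


def train_logistic(rows, labels, lr=0.1, epochs=5000):
    """Fit weights and a bias with batch gradient descent on the log-loss."""
    n_features = len(rows[0])
    w = [0.0] * n_features
    b = 0.0
    n = len(rows)
    for _ in range(epochs):
        grad_w = [0.0] * n_features
        grad_b = 0.0
        for x, y in zip(rows, labels):
            err = failure_probability(w, b, x) - y  # d(log-loss)/dz for one sample
            for j in range(n_features):
                grad_w[j] += err * x[j]
            grad_b += err
        w = [wj - lr * gj / n for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b


# Usage with invented history: more errors and more operating hours precede failures.
X = [[0, 1.0], [1, 1.5], [2, 2.0], [5, 3.0], [7, 3.5], [9, 4.0]]
y = [0, 0, 0, 1, 1, 1]
w, b = train_logistic(X, y)
print(f"P(failure) for 8 errors/day at 3.8k hours: {failure_probability(w, b, [8, 3.8]):.2f}")
```

A probability like this could then be compared against a threshold to decide which parts a technician should take along, although any real threshold would depend on costs the article does not quantify.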

The big data challenge has been discussed again and again for years, yet in many companies data still lies idle instead of being put to efficient use.

All of the manufacturer's large medical devices send log files with system-relevant status information to the responsible development department every day. In the past, however, this data was only spot-checked and evaluated manually by experts.

The information could not be shared across departments, and targeted analyses on a large data basis were not possible. As a result, the probability of a device failing could not be forecast. For the manufacturer, this implied:

To reduce costs in the long term while further improving service quality, the company launched a predictive maintenance initiative and brought Otofacto on board. The first step was to record all data from the transmitted log files centrally and enrich it with information from the SAP system. In parallel, an analytical model was developed to detect recurring patterns in the data.
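The first step, recording log data centrally, enriching it with master data, and looking for recurring patterns, can be sketched roughly as follows. The log line format, the field names, and the `sap_devices` dictionary (a stand-in for an extract from the SAP system, not an SAP API) are all hypothetical assumptions. Counting which error codes appear shortly before a part replacement is just one simple way to surface recurring patterns, not necessarily the model used in the project.

```python
from collections import Counter
from dataclasses import asdict, dataclass
from datetime import date, timedelta


@dataclass
class LogEvent:
    day: date
    serial: str   # device serial number
    code: str     # status/error code reported by the device
    message: str


def parse_log_line(line: str) -> LogEvent:
    """Parse one line in a hypothetical 'YYYY-MM-DD;serial;code;message' format."""
    day, serial, code, message = line.strip().split(";", 3)
    return LogEvent(date.fromisoformat(day), serial, code, message)


def enrich(events, sap_devices):
    """Join each event with master data keyed by serial number (hypothetical SAP extract)."""
    return [
        {**asdict(ev), "model": sap_devices.get(ev.serial, {}).get("model", "unknown")}
        for ev in events
    ]


def codes_preceding_replacements(events, replacements, window_days=7):
    """Count (part, code) pairs seen on the same device within window_days before a replacement."""
    counts = Counter()
    for serial, repl_day, part in replacements:
        start = repl_day - timedelta(days=window_days)
        for ev in events:
            if ev["serial"] == serial and start <= ev["day"] < repl_day:
                counts[(part, ev["code"])] += 1
    return counts


# Usage with invented data.
raw_logs = [
    "2024-03-01;SN-1;E42;tube temperature high",
    "2024-03-03;SN-1;E42;tube temperature high",
    "2024-03-04;SN-1;E17;cooling flow low",
    "2024-03-04;SN-2;E99;self-test ok",
]
sap_devices = {"SN-1": {"model": "CT-A"}, "SN-2": {"model": "MR-B"}}
events = enrich([parse_log_line(line) for line in raw_logs], sap_devices)
replacements = [("SN-1", date(2024, 3, 6), "x-ray tube")]
for (part, code), n in codes_preceding_replacements(events, replacements).most_common():
    print(part, code, n)
```

Raw co-occurrence counts are only a first pass: a code that appears constantly on healthy devices would also score high, so a fuller analysis would compare these counts against devices that did not need a replacement.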

Knowing that a machine will soon break down and preventing this at the right moment saves companies time and money.

Gaining this knowledge depends on analyzing the existing data.

Step by step, the project team implemented various software components to prepare the usable information and evaluate it.

The implementation of the BI platform and the development of predictive maintenance triggered a ripple effect at the manufacturer. It became clear that even vast quantities of data could be analyzed with good performance. At the same time, thanks to the broader data basis, the evaluations turned out to contain considerably more valuable information overall.

Further data sources have gradually been connected to the platform, and new use cases keep emerging.

All this saves the company license and maintenance fees. In addition, the manufacturer benefits from having the data and analyses of the individual business departments available centrally, so they can be shared across the organization. Otofacto's experts support the platform in live operation, continue to refine the pattern recognition, and are steadily expanding the manufacturer's BI platform. Significant cost savings and valuable insights are already evident in many areas.

Building a central data pool requires very detailed planning and in-depth knowledge of the individual software components.

But those who take up the challenge profit very quickly from a wealth of insights.